2021-09-07: New version (1.4) now works on M1 Macs.

Performance improvements released for both iOS and macOS.

Follow the app on Facebook and Twitter!

Subscribe to the email list to hear about future developments.

See live sound in high-precision detail across both the time and frequency domains!

iOS (iPhone, iPad) or macOS (desktop):

You can use the VSound app:

- to make visualisations for music videos

- to enjoy various visualisations while listening to music

- to learn tonal languages: see how the tones look, and try to mimic them

- to practice singing and try to hit specific notes, octaves, and interval jumps

- as a visual aid for people who cannot perceive certain frequencies

- to analyse unusual frequencies in the sounds around you

Feel free to contact me at vsoundapp@gmail.com (or visit akuz.me)

The science bit

Feel free to skip this and scroll down for some awesome visualisations!

If you have ever tried to use the Fast Fourier Transform (FFT) to analyse the frequency content (notes) of music, you will have noticed that you cannot really see all the details of the sound. This is because the FFT is designed to detect frequencies in a fixed "window" (time period) of sound. To analyse live streaming sound, the FFT has to be adapted by analysing small, partially overlapping windows of sound. This leads to a quantum-physics-like trade-off: if you use windows that are too short, you lose the ability to detect low frequencies (you just see a lot of noise); and if you use windows that are too long, you lose the information about when a specific note (frequency) was actually playing, which is especially important for the higher notes. Moreover, the FFT also suffers from so-called frequency leakage: if a note does not fall exactly on one of the frequencies the FFT can detect, the FFT cannot tell exactly which note is playing, and you see the detected note smudged across a range of nearby notes.
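To make the window trade-off and the leakage effect concrete, here is a minimal sketch in plain Python. It uses a naive discrete Fourier transform rather than a real FFT library, and it has nothing to do with the algorithm used in the app; the sample rate, window size, test frequencies and helper names are all assumptions chosen for illustration.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Naive O(n^2) DFT; returns normalised magnitudes for bins 0..n/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        mags.append(abs(acc) / n)
    return mags


def sine(freq_hz, sample_rate, n):
    """n samples of a unit-amplitude sine wave at freq_hz."""
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate) for t in range(n)]


def bin_spacing_hz(sample_rate, window_size):
    """Distance in Hz between neighbouring frequencies the DFT can detect."""
    return sample_rate / window_size


def significant_bins(mags, threshold=0.01):
    """Indices of bins whose magnitude exceeds the threshold."""
    return [k for k, m in enumerate(mags) if m > threshold]


SAMPLE_RATE = 8000  # Hz (illustrative value)
WINDOW = 256        # samples per window (illustrative value)

# Trade-off: a longer window gives finer frequency spacing,
# but each window then covers a longer stretch of time.
for size in (64, 256, 1024):
    spacing = bin_spacing_hz(SAMPLE_RATE, size)
    duration_ms = 1000 * size / SAMPLE_RATE
    print(f"window={size:5d}  spacing={spacing:7.2f} Hz  duration={duration_ms:6.1f} ms")

# Leakage: 1000 Hz falls exactly on bin 32 (32 * 31.25 Hz),
# while 1015.625 Hz falls halfway between bins 32 and 33.
on_bin = dft_magnitudes(sine(1000.0, SAMPLE_RATE, WINDOW))
off_bin = dft_magnitudes(sine(1015.625, SAMPLE_RATE, WINDOW))

print("bins above threshold, on-bin tone: ", len(significant_bins(on_bin)))
print("bins above threshold, off-bin tone:", len(significant_bins(off_bin)))
```

The tone that sits exactly on a detectable frequency lights up a single bin, while the tone that falls between two of them is smeared across many neighbouring bins. That smearing is the "smudging" described above.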

The novel frequency detection algorithm used in the app circumvents most (if not all) of these problems:

- it's completely automatic, no parameters to configure, i.e.:

  - you do not need to choose a "window" size for breaking down sound into small pieces

- there is a "high-precision" option, which allows dealing with frequency leakage (smudging)

This allows high-quality real-time detection of frequencies (notes) without configuring anything.

With such a tool, why not have fun with different visualisations!

Visualisations

The app implements several visualisations, which you can watch below without installing the app. These were pre-recorded during the app's development and posted on Instagram (on my personal account). See the user guide for full details on how to select different visualisations while you are using the app.

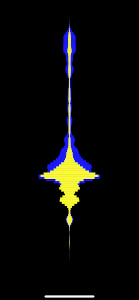

Linear visualisation (#1)

Music: @symbolico — please follow the artist!

Rectangles visualisation (#2)

Music: @drrtywulvz — please follow the artist!

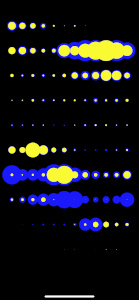

Bubbles visualisation (#3)

Music: @tambour_battant_tbbt — please follow the artist!

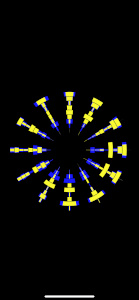

Circle visualisation (#4)

Music: @symbolico — please follow the artist!

That's it, for now!

The future

This app is a by-product of a larger project that I have been working on... The current state of artificial intelligence (AI) in music is lagging behind AI for visual information by a mile. In the last few years there has been immense progress in classifying images, detecting objects in images, generating "fake" images and art, and even fake videos! Although there have been some impressive developments for sound as well (namely WaveNet, Magenta, AIVA), it seems that we are much further away from generating good AI music than from generating fake images of cats...

I am particularly interested in generating rhythmic dance music. At first this seems like it could be a simpler task than generating a symphony, for example, since dance music is generally considered to be "simpler" and more structured. However, behind this simplicity hides an intricate organisation of patterns, repeated in fractal-like structures spanning from fractions of a second to several minutes. It seems that this intricate structure calls for a special approach to analysing (and learning to generate) this kind of music. This is an ongoing weekend project that I entertain myself with. I am happy to discuss!

In the meantime, I'll probably also work on better visualisations, perhaps even some 3D ones, which would use the same high-precision algorithm for frequency (note) detection.

Subscribe to the email list to hear about future developments.